Azure Fundamentals: Cloud concepts

Entry point into the Azure ecosystem

Azure Fundamentals, also known as AZ-900, is an entry point into the Azure ecosystem.

The goal is not hands-on experience but a solid understanding of cloud concepts, what Azure has to offer, and pricing.

Technical IT experience is not required.

As a cloud computing platform, Azure provides a variety of services.

Let’s apply what we learned in the Azure Developer Certification Roadmap and build some structure into our learning.

Let’s navigate to the Azure Fundamentals certification page and explore.

There we see what is expected from us and which skills are measured. For more details we should visit the AZ-900 study guide, where we can find the skills at a glance, a deeper look at what is expected within each topic, and study resources.

These are the focus areas:

| Focus area | Weight |
|---|---|
| Describe cloud concepts | 25–30% |
| Describe Azure architecture and services | 35–40% |
| Describe Azure management and governance | 30–35% |

After getting an insight into what this certification path gives us, we should focus on covering the study resources provided. The entry point is the course provided by Microsoft, which is organized into modules and learning paths.

In the course each domain area maps to a learning path.

Along the way we should consult the official Azure docs, and other available resources, for a more comprehensive understanding.

You can go through the materials on your own, the way I suggested. I will be covering those aspects here as well from different angles with easily relatable examples.

Let’s proceed with the rest of this post, where we will cover cloud concepts, the first domain area in the preparation course.

Concepts of Cloud computing

Let’s start with a simple scenario that anyone can relate to. There are so many computers around us. What was once hard to imagine is now the reality we live in.

Let’s consider a scenario where we want to host a website, a Web Shop. We can do it on our own computer. Nowadays a computer can be anything with processing power, memory, network and storage capacity. But since we want other people to access our website, we need to expose it to the internet.

We might also build our own data center. Or rise up into the clouds.

Scenario: Hosting a Web Shop

Before we make decisions we need to know what the requirements are.

Let’s assume the following:

- Performance (fast load time, smooth navigation, quick checkout process)

- Scalability (ability to handle traffic spikes during sales and promotions, able to increase the user base and product catalog)

- Availability (high uptime, minimal downtime, redundancy to handle failure)

- Security (confidentiality of the data, protection of customer data such as personal info and payment details, secure transactions)

- Cost efficiency

  - Initial cost: relatively low

  - Ongoing cost: low

- Maintenance and Management

  - Easy to update and maintain the system

  - Minimal need for specialized IT staff

Option 1: Hosting on a Personal Computer

We would need to invest in infrastructure that can support the requirements. In simple words, we need to buy a computer with specifications that can satisfy them.

Something like a mid-to-high-end multi-core CPU to handle web requests, 16 or 32 GB of RAM to manage multiple user sessions and server processes, and fast SSD storage (e.g. 1 TB) to improve load times.

Performance: Sufficient for basic operations but may struggle with high concurrency.

Scalability: Can only handle a small number of concurrent users before performance degrades. Upgrading involves significant manual hardware changes.

Availability: Single point of failure. If the computer crashes, the web shop goes offline. No built-in redundancy.

Security: Basic. Requires manual setup of security measures (e.g., firewalls, SSL certificates). Limited protection against sophisticated attacks.

Cost Efficiency:

- Initial Cost: Relatively low, mainly the cost of the computer and initial setup

- Ongoing Cost: Low, but limited by scalability and availability issues.

Maintenance and management:

- High: Requires regular backups, updates, and manual maintenance. User needs to handle all aspects of IT management.

Option 2: Hosting in our own server room (basically a bunch of computers)

Compared to the initial scenario with a personal computer, there are quite a few things we can improve.

By investing more in infrastructure we can improve on the other non-functional requirements. We can increase computing power by investing in more servers. To grow our product catalog we need additional storage. We also need to invest in networking and security, as well as cooling and maintenance.

Performance: Able to handle more concurrent users and complex operations.

Scalability: Moderate. We can scale by adding more servers and upgrading hardware as needed, although this requires significant time and investment.

Availability: We can implement redundant systems (e.g., failover servers, uninterruptible power supply for power outages). Better than a single computer but still limited to local infrastructure.

Security: More robust security setup with dedicated hardware firewalls, intrusion detection systems, and secure facilities.

Cost Efficiency:

- Initial Cost: High, includes cost of servers, networking equipment, physical space, and initial setup.

- Ongoing Cost: High, includes power, cooling, maintenance, and potential upgrades.

Maintenance and Management:

- High: Requires a dedicated IT team for maintenance, updates, and troubleshooting. Physical presence needed for hardware issues.

Option 3: Hosting in the Cloud

Basically, a data center built and managed by a third party exposes its resources to us over the internet.

Performance: Consistently high performance, can handle high traffic and complex operations with dynamic resource allocation.

Scalability: High. Instantly scalable resources to handle traffic spikes. Auto-scaling features adjust resources dynamically.

Availability: High. Built-in redundancy and high availability. Data replicated across multiple geographic locations. SLAs often guarantee 99.99% uptime.

Security: Advanced. Cloud providers offer comprehensive security features, including encryption, DDoS protection, compliance certifications, and continuous monitoring.

Cost Efficiency:

- Initial Cost: Low, no need for significant upfront investment in hardware.

- Ongoing Cost: Pay-as-you-go model, costs scale with usage. Can optimize costs by choosing appropriate instances and scaling down during low demand.

Maintenance and Management: Low: The cloud provider handles hardware maintenance, updates, and security. Focus remains on application development and management.

In short:

- Personal Computer: Limited performance, poor scalability, high maintenance, and single point of failure. Suitable only for very small-scale, low-traffic operations.

- Server Room: Better performance and scalability with improved availability and security, but requires significant investment and ongoing maintenance. Suitable for medium-scale operations with a dedicated IT team.

- Cloud Computing: Excellent performance, scalability, and availability with advanced security. Cost-effective with a pay-as-you-go model, and minimal maintenance required. Ideal for growing businesses or those with variable traffic patterns.

There isn’t a silver bullet. It’s crucial to carefully consider your specific requirements, future growth plans, and the resources at your disposal before making a decision.

While there is no one-size-fits-all solution, cloud computing provides us with great flexibility, stands out for its ability to adapt to changing needs and scale seamlessly, making it an attractive option for many businesses.

Pricing (Consumption) model

When comparing IT infrastructure models, there are two types of expenses to consider:

- Capital expenditure (CapEx)

- Operational expenditure (OpEx).

CapEx is typically a one-time, up-front expenditure to purchase or secure tangible resources. Examples include investing in a personal computer, building a data center, or buying a car.

OpEx is spending money on services or products over time. Examples include renting a car, subscribing to Netflix, or paying for a subscription-based software license.

Cloud computing falls under OpEx, as it operates on a consumption-based (pay-as-you-go) model.

We don’t pay for the physical infrastructure, electricity, the building, staff, or anything else associated with running a datacenter. We pay only for the resources that we use.

We pay while we use a resource; once we stop using it, we stop paying.

Benefits of a consumption or pay-as-you-go model:

- paying only for the resources that we consume and when we consume

- no upfront costs

- no need to invest in building and managing costly infrastructure

- scaling is easy - in the traditional model we invest in infrastructure sized for peak cases (promotions, discounts, a surge of users), and we still need to keep and maintain that infrastructure even when it is not fully utilized. In the cloud consumption model we can scale computing and storage power easily and pay for the extra capacity only when we need it.

With this model you can:

- Plan and manage your operating costs.

- Run your infrastructure more efficiently.

- Scale as your business needs change.
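To make the difference between provisioning for the peak and paying for consumption more concrete, here is a minimal sketch. All numbers (price per server-hour, number of servers, length of the sale) are assumptions made up for illustration and are not real Azure prices.

```python
# Hypothetical numbers for illustration only; these are not real Azure prices.
HOURS_PER_MONTH = 720  # 30 days * 24 hours


def fixed_capacity_cost(peak_servers: int, cost_per_server_hour: float) -> float:
    """Cost when we size for the peak and keep everything running all month."""
    return peak_servers * cost_per_server_hour * HOURS_PER_MONTH


def pay_as_you_go_cost(usage: list[tuple[int, int]], cost_per_server_hour: float) -> float:
    """Cost when we pay only for the server-hours we actually consume.

    usage is a list of (servers, hours) pairs that together cover the month.
    """
    server_hours = sum(servers * hours for servers, hours in usage)
    return server_hours * cost_per_server_hour


# Assumed traffic pattern: 2 servers for most of the month,
# 10 servers during a 48-hour promotion.
usage = [(2, 672), (10, 48)]
rate = 0.10  # assumed price per server-hour

fixed = fixed_capacity_cost(peak_servers=10, cost_per_server_hour=rate)
on_demand = pay_as_you_go_cost(usage, rate)

print(f"Provisioned for peak all month: {fixed:.2f}")
print(f"Pay-as-you-go:                  {on_demand:.2f}")
```

The exact figures don’t matter; the point is that with a spiky traffic pattern the server-hours we consume are far below what a peak-sized setup would keep running.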

Cloud model types

Based on different needs, traditional data centers have evolved in different directions, into different models.

The main three types of cloud computing models are public cloud, private cloud, and hybrid cloud.

Public cloud - owned and run by third-party cloud service providers like Microsoft, Amazon, Google. They manage and provide resources via the internet.

Private cloud - similar to the traditional model. It can be hosted and managed by us or by a third-party vendor, typically on-premises, but it is used exclusively by a single customer.

Hybrid cloud - computing environment that combines private with public cloud, allowing data and applications to be shared between them.

There is also the term multi-cloud, which refers to a use case where we’re not locked in to a single cloud provider but instead use services from multiple providers.

| Public cloud | Private cloud | Hybrid cloud |
|---|---|---|
| No capital expenditures to scale up | Organizations have complete control over resources and security | Provides the most flexibility |
| Applications can be quickly provisioned and deprovisioned | Data is not collocated with other organizations’ data | Organizations determine where to run their applications |
| Organizations pay only for what they use | Hardware must be purchased for startup and maintenance | Organizations control security, compliance, or legal requirements |
| Organizations don’t have complete control over resources and security | Organizations are responsible for hardware maintenance and updates | |

ref: Microsoft

Within these deployment models there are different service types. Let’s cover them in the next section.

Cloud service types

Let’s briefly look into the model that Microsoft provides

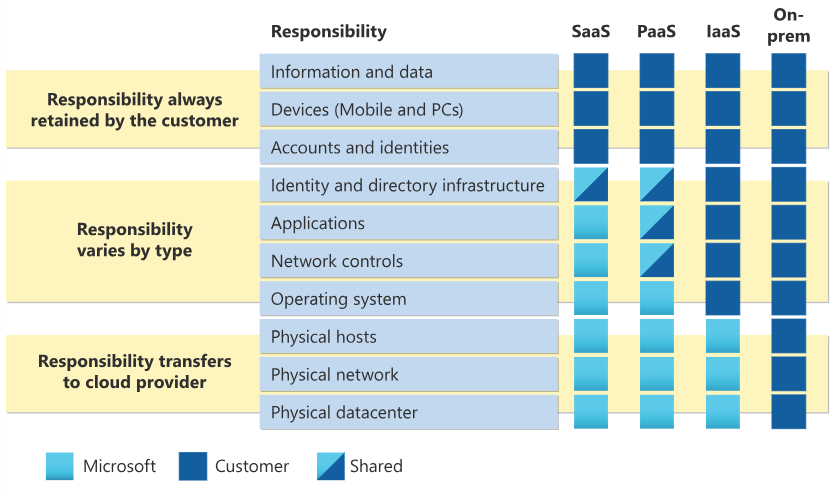

With an on-premises datacenter, you’re responsible for everything. With cloud computing, those responsibilities shift. The shared responsibility model is a fundamental concept in cloud computing that outlines the division of responsibilities between the cloud service provider and the customer.

On the diagram above you can clearly see how responsibilities are divided based on the service type.

There are the following cloud service types:

- IaaS - infrastructure as a service

- PaaS - platform as a service

- SaaS - software as a service

Infrastructure as a Service (IaaS)

In IaaS, infrastructure is offered by a cloud provider as a service. Instead of maintaining servers, storage, network, cooling etc. on our own, we rent them from the cloud provider on a pay-as-you-go model. In this model the provider also offers other services such as logging, monitoring, backups and disaster recovery.

A virtualization layer is built on top of the infrastructure. That is what enables us to pay for and use resources only when we need them, which drastically reduces costs.

Virtualization uses software to create an abstraction layer over computer hardware, enabling the division of a single computer’s hardware components—such as processors, memory and storage—into multiple virtual machines (VMs). Each VM runs its own operating system (OS) and behaves like an independent computer, even though it is running on just a portion of the actual underlying computer hardware. IBM

Platform as a Service (PaaS)

In PaaS, platform is offered as a service.

In this model the infrastructure is handled fully by the cloud provider, virtualized and provided to us. On top of that, the cloud provider manages more of the stack for us, such as the OS, runtime, databases etc. We focus on developing and managing our application.

In this model we usually pick

- what kind of infrastructure we need

- which OS we want to run on top of that

- runtime

- storage

- where we want it hosted (based on our users)…

- optionally, backup and disaster recovery options

- …

And everything is managed by the cloud provider. We focus on developing our application, deploying it to that infrastructure and managing it.

Software as a Service (SaaS)

In SaaS, software is offered over the internet, usually through a subscription model. Some examples are Microsoft Office 365, Gmail, Dropbox and Netflix.

We don’t worry about underlying infrastructure, platforms, or development.

There are multiple benefits of this model:

- Cost - since it’s a shared model, meaning that we and the other tenants share the same infrastructure (remember virtualization), we get the service much cheaper than it would cost in a traditional data center

- Availability - the service is not just highly available but also available anywhere, thanks to the global distribution of data centers

- Scalability - whenever the software we consume needs more resources, they are provisioned and made available for us

And there is another service type that emerged, called FaaS.

Function as a Service (FaaS)

FaaS is very similar to PaaS, where we only had to think about developing, deploying and managing our application. Here we go a step further and work at the level of a single function.

This gives us much more flexibility and removes a lot of unnecessary work around setting up and building an application. It’s useful in scenarios where we need to handle one particular task.

We will talk about functions more in the future, but in short they take some data as input and do some processing. They can be connected to other services (such as storage, a database or an event system) where they can read or write data, and they optionally return some results. They can even be connected to other functions, which enables us to create complex scenarios. They are ideal for async tasks.

Benefits of this model include:

- High availability - they can be spread across different availability zones and regions

- Scalability - they can be scaled both manually and elastically based on traffic changes

- Cost - there is a consumption model where we pay per execution (spoiler alert: Azure offers 1 million free requests per month)
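The "input, processing, optional write to another service, optional result" shape described above can be sketched in plain Python. This is not the Azure Functions programming model; the handler name, the event fields and the in-memory `order_store` standing in for a storage service are all hypothetical.

```python
# A plain-Python sketch of the "function" idea. This is NOT the Azure Functions API;
# every name below is made up for illustration.
from typing import Any

# Stand-in for an external storage service that the function writes to.
order_store: dict[str, dict[str, Any]] = {}


def handle_order_placed(event: dict[str, Any]) -> dict[str, Any]:
    """Take an event as input, process it, write to storage and return a result."""
    # Processing: compute the order total from the line items.
    total = sum(item["price"] * item["quantity"] for item in event["items"])

    # Side effect: persist the processed order in the (pretend) storage service.
    order_store[event["order_id"]] = {"total": total, "status": "received"}

    # Optional result handed back to the caller or to the next function.
    return {"order_id": event["order_id"], "total": total}


result = handle_order_placed(
    {
        "order_id": "A-1",
        "items": [
            {"price": 20, "quantity": 2},
            {"price": 5, "quantity": 1},
        ],
    }
)
print(result)
```

In a real FaaS platform the provider would invoke such a handler for us whenever the triggering event occurs, and we would be billed per execution.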

Benefits of cloud computing

Microsoft points out that two of the biggest considerations when building and deploying cloud applications are uptime (or availability) and the ability to handle demand (or scale).

Availability (also referred to as uptime)

Example: for a web shop, availability means that our users can always access it without interruptions, from different locations, seamlessly and with minimal latency, even in peak periods.

When we rent a service from a cloud provider, it comes with a certain SLA (service level agreement). That’s a formal agreement between us and our service provider: their guarantee that the service will be available for a specified percentage of time (commonly 99.9% or 99.95%).

The example that Microsoft gives is a service that is available for:

| SLA | Possible unavailability time |
|---|---|
| 99% | can be unavailable for about 1.68h per week, or 7.2h (432 min) per 30-day month |
| 99.9% | can be unavailable for about 10 min per week, or 43.2 min per 30-day month |
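The numbers in the table follow directly from the SLA percentage. Here is a small sketch of that arithmetic, assuming a 7-day week and a 30-day month; the function name is ours, not part of any Azure tooling.

```python
HOURS_PER_WEEK = 7 * 24    # 168 hours
HOURS_PER_MONTH = 30 * 24  # 720 hours, assuming a 30-day month


def allowed_downtime_minutes(sla_percent: float, period_hours: float) -> float:
    """Maximum downtime (in minutes) that still satisfies the SLA over the period."""
    unavailable_fraction = 1 - sla_percent / 100
    return unavailable_fraction * period_hours * 60


for sla in (99.0, 99.9, 99.95, 99.99):
    per_week = allowed_downtime_minutes(sla, HOURS_PER_WEEK)
    per_month = allowed_downtime_minutes(sla, HOURS_PER_MONTH)
    print(f"{sla}% -> {per_week:.1f} min/week, {per_month:.1f} min/month")
```

Each extra "nine" cuts the allowed downtime by a factor of ten, which is why higher SLAs are harder to offer.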

100% is not easy to achieve due to maintenance, upgrades or unpredictable events.

The provider is basically signing an agreement with us, and when the SLA is not met they often compensate us with credits (basically money) that we can use on their platform.

Scalability - the ability to adjust resources to meet demand. Working on scalability also improves the availability of our application.

Example: since we want our application to always be there for our users, even in peak periods (discounts, promotions, think of Black Friday), the machine that hosts our application needs to handle the number of requests and the load that come with that. We might also want to grow our catalog and user base, and we need to store that data somewhere.

To be able to do this we either invest in a bigger, more powerful machine (or upgrade the existing one), or we buy more machines and distribute the load among them.

That process is called scaling.

The scenario where we invest in one machine and make it more powerful by adding more CPUs, more memory etc. is called vertical scaling (often referred to as scaling up).

The other scenario, where we buy more machines and distribute the load among them, is called horizontal scaling (scaling out).

The benefit cloud computing gives us is that we don’t pay for services or resources we don’t need: we can scale up and down to match demand and pay only for what we consume.
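A rough way to see the difference between the two approaches is to compute what each one needs for a given load. The machine sizes and requests-per-second capacities below are invented for illustration and do not correspond to real Azure VM sizes.

```python
import math
from typing import Optional

# Hypothetical machine sizes and the requests per second each one can handle.
MACHINE_SIZES = {"small": 100, "medium": 400, "large": 1000}


def smallest_machine_size(requests_per_second: float) -> Optional[str]:
    """Vertical scaling: pick the smallest single machine that covers the load.

    Returns None when even the biggest machine is not enough.
    """
    for name, capacity in sorted(MACHINE_SIZES.items(), key=lambda item: item[1]):
        if capacity >= requests_per_second:
            return name
    return None


def instances_needed(requests_per_second: float, capacity_per_instance: float) -> int:
    """Horizontal scaling: how many identical machines are needed to cover the load."""
    return max(1, math.ceil(requests_per_second / capacity_per_instance))


for label, load in (("regular day", 150), ("Black Friday", 2500)):
    size = smallest_machine_size(load)
    count = instances_needed(load, MACHINE_SIZES["small"])
    print(f"{label}: scale up to {size!r}, or scale out to {count} small machines")
```

With these assumed numbers the peak load exceeds the largest single machine, so vertical scaling hits a ceiling while horizontal scaling simply adds more instances.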

Reliability - the ability of a system to perform its function without failures and to recover from failure. This refers to how consistently and predictably the system behaves under expected conditions. One important aspect of reliability is fault tolerance: the system’s ability to continue operating correctly even if some of its components fail.

Example: A reliable online banking system consistently processes transactions accurately, updates account balances correctly, and generates precise statements without errors.

Predictability - in terms of costs, performance, availability, scalability… ensuring that we can anticipate how the cloud infrastructure will support our applications and workloads under varying conditions.

Examples:

- Cost - Cloud providers usually have cost calculators that help you estimate costs based on different inputs. In the case of Azure you can visit the Pricing Calculator | Microsoft Azure. There are also ways to monitor our resources in real time and adjust them if needed. We can use this to predict our future costs as well.

- Availability - SLAs already mentioned in the context of availability.

- Scalability - there are elastic options where service can scale based on different metrics.

- Performance - having options to select machines that are designed to be performant as they come with guaranteed number of CPUs, RAM and storage IOPS (Input/Output Operations Per Second).

Security & governance - here we talk about data protection, privacy, compliance requirements, vulnerabilities, threat detection…

Azure provides tools and services that help us meet these needs. Certain things depend on the operating model.

For instance, when it comes to data protection and privacy, we can host our data within a region where we stay compliant (with GDPR, for instance). If we want to protect our data against unauthorized access, we can apply RBAC (role-based access control), where we assign roles and permissions so that only the users who should access the data can access it. Azure also helps ensure that the resources we deploy are compliant: it provides cloud-based auditing that flags non-compliant resources and suggests mitigation strategies.
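The core idea behind RBAC can be sketched in a few lines: users get roles, roles carry permissions, and every access is checked against them. This is a simplified illustration, not how Azure RBAC is implemented; the role names, users and actions are made up.

```python
# Simplified illustration of role-based access control.
# Role names, users and actions are hypothetical, not Azure built-in roles.
ROLE_PERMISSIONS = {
    "reader": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "delete"},
}

USER_ROLES = {
    "alice": "admin",
    "bob": "reader",
}


def is_allowed(user: str, action: str) -> bool:
    """Return True only if the user's role grants the requested action."""
    role = USER_ROLES.get(user)
    # Unknown users have no role and therefore no permissions.
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("alice", "delete"))
print(is_allowed("bob", "write"))
print(is_allowed("mallory", "read"))
```

Denying by default, as the unknown-user case does here, is the usual design choice: access exists only where a role explicitly grants it.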

If we go with the IaaS operating model we get the physical resources, but we need to handle the OS, patches, security and other updates, and other types of maintenance ourselves. With PaaS, on the other hand, that is taken care of for us.

Azure also provides encryption mechanisms so that data can be encrypted both at rest and in transit, as well as secure networking and ways to handle threats like DDoS.

Coming up next

I hope you enjoyed this post. In the next one we continue our journey in the same order as Microsoft Learn, covering Azure architecture and the various services it offers.

Stay tuned :)